The Apple iPad Pro Review

by Ryan Smith, Joshua Ho & Brandon Chester on January 22, 2016 8:10 AM EST

SoC Analysis: On x86 vs ARMv8

Before we get to the benchmarks, I want to spend a bit of time talking about the impact of CPU architectures at a moderate level of technical depth. At a high level, there are a number of peripheral issues when it comes to comparing these two SoCs, such as the quality of their fixed-function blocks. But when you look at what consumes the vast majority of the power, it turns out that the CPU is competing with things like the modem/RF front-end and GPU.

x86-64 ISA registers

Probably the easiest place to start when we’re comparing things like Skylake and Twister is the ISA (instruction set architecture). This subject alone is probably worthy of an article, but the short version for those that aren't really familiar with this topic is that an ISA defines how a processor should behave in response to certain instructions, and how these instructions should be encoded. For example, if you were to add together two integers held in the EAX and EDX registers, x86-32 dictates that this would be encoded as 01d0 in hexadecimal. In response to this instruction, the CPU would add whatever value was in the EDX register to the value in the EAX register and leave the result in the EAX register.
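To make the encoding concrete, here is a minimal sketch of how the two bytes 01 d0 break down. It assumes only the register-to-register form of opcode 0x01 (ADD r/m32, r32); the function name and the error handling are illustrative and not part of any real disassembler.

```python
# Register numbering used by the ModRM byte for 32-bit operands.
REG32 = ["eax", "ecx", "edx", "ebx", "esp", "ebp", "esi", "edi"]


def decode_add_rm32_r32(code: bytes) -> str:
    """Decode the two-byte register form of ADD r/m32, r32 (opcode 0x01)."""
    opcode, modrm = code[0], code[1]
    if opcode != 0x01:
        raise ValueError("this sketch only handles opcode 0x01")
    mod = modrm >> 6             # top two bits: addressing mode
    reg = (modrm >> 3) & 0b111   # middle three bits: source register
    rm = modrm & 0b111           # low three bits: destination when mod == 0b11
    if mod != 0b11:
        raise ValueError("memory operands are not handled in this sketch")
    # Intel syntax lists the destination first.
    return f"add {REG32[rm]}, {REG32[reg]}"


print(decode_add_rm32_r32(bytes.fromhex("01d0")))  # add eax, edx
```

The second byte, 0xd0, is 11 010 000 in binary: register-direct mode, source register 2 (EDX), destination register 0 (EAX).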

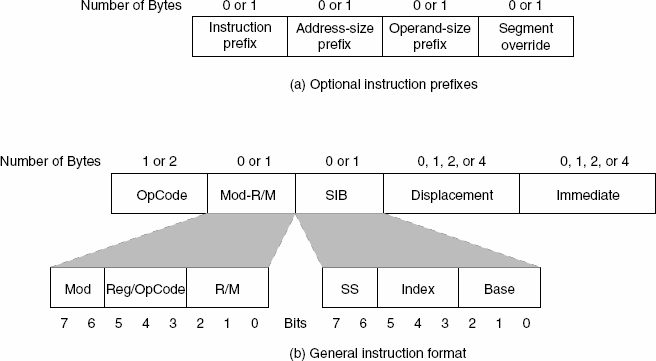

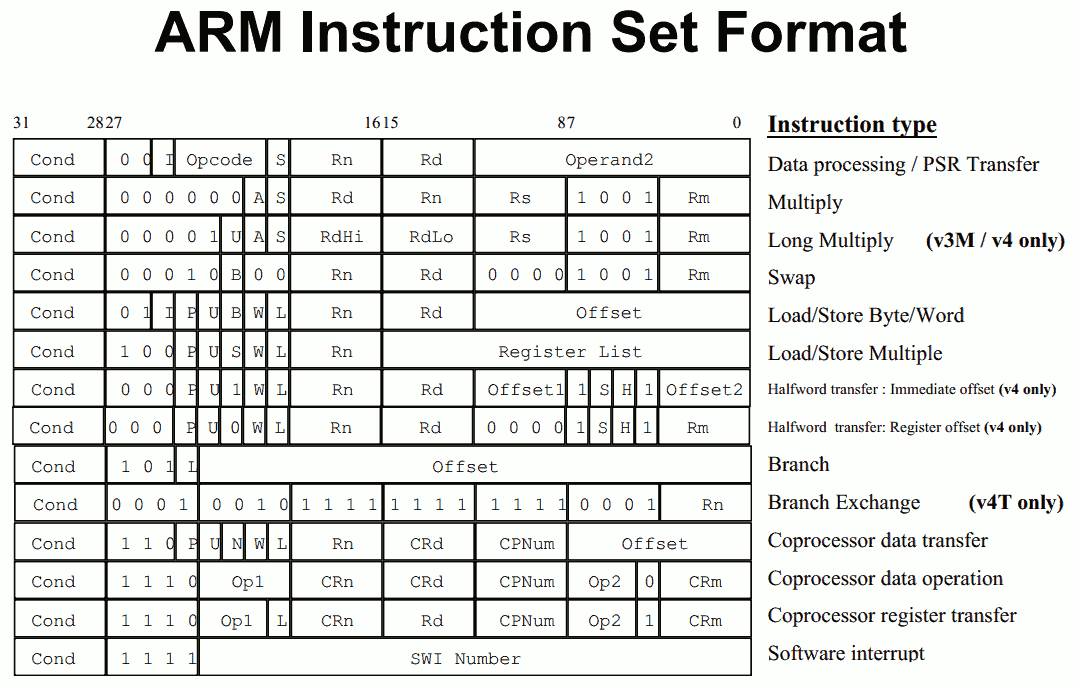

The fundamental difference between x86 and ARM is that x86 is a relatively complex ISA, while ARM is relatively simple by comparison. One key difference is that ARM dictates that every instruction is a fixed number of bits. In the case of ARMv8-A and ARMv7-A, all instructions are 32 bits long unless you're in Thumb mode, in which case instructions are 16 bits long, but the same sort of trade-offs that come from a fixed-length instruction encoding still apply. Thumb-2 mixes 16-bit and 32-bit instructions, which makes it a variable-length ISA, so in some sense the trade-offs of a variable-length encoding apply there. It’s important to make a distinction between instruction and data here, because even though AArch64 uses 32-bit instructions the register width is 64 bits, which is what determines things like how much memory can be addressed and the range of values that a single register can hold. By comparison, Intel’s x86 ISA has variable-length instructions. In both x86-32 and x86-64/AMD64, each instruction can range anywhere from 8 to 120 bits long depending upon how the instruction is encoded.

At this point, it might be evident that on the implementation side of things, a decoder for x86 instructions is going to be more complex. For a CPU implementing the ARM ISA, because the instructions are of a fixed length the decoder simply reads instructions 2 or 4 bytes at a time. On the other hand, a CPU implementing the x86 ISA would have to determine how many bytes to pull in at a time for an instruction based upon the preceding bytes.
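The difference in decoder work can be sketched with a toy model. The variable-length encoding below is hypothetical (the low two bits of an instruction's first byte give its length minus one) and is not how x86 encodes length; it only illustrates that each instruction's start depends on decoding the one before it.

```python
def fixed_length_boundaries(code: bytes, width: int = 4) -> list[int]:
    """With a fixed width, every start offset is known without reading any bytes."""
    return list(range(0, len(code), width))


def variable_length_boundaries(code: bytes, length_of) -> list[int]:
    """Each start offset is only known once the previous length has been determined."""
    offsets, pos = [], 0
    while pos < len(code):
        offsets.append(pos)
        pos += length_of(code, pos)
    return offsets


def toy_length(code: bytes, pos: int) -> int:
    """Hypothetical rule: low two bits of the first byte encode (length - 1)."""
    return (code[pos] & 0b11) + 1


# Made-up byte stream containing instructions of 1, 2, 4 and 3 bytes.
stream = bytes([0x00, 0x01, 0xAA, 0x03, 0x11, 0x22, 0x33, 0x02, 0x44, 0x55])

print(fixed_length_boundaries(bytes(16)))              # [0, 4, 8, 12]
print(variable_length_boundaries(stream, toy_length))  # [0, 1, 3, 7]
```

The fixed-width case never inspects the bytes, so in hardware several instructions can be picked out of a fetch block in parallel. The variable-width loop carries a serial dependency from one instruction to the next, which is the part a real x86 front-end has to spend extra logic on.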

A57 Front-End Decode, Note the lack of uop cache

While it might sound like the x86 ISA is just clearly at a disadvantage here, it’s important to avoid oversimplifying the problem. Although the decoder of an ARM CPU already knows how many bytes it needs to pull in at a time, this inherently means that unless all 2 or 4 bytes of the instruction are used, each instruction contains wasted bits. While it may not seem like a big deal to “waste” a byte here and there, this can actually become a significant bottleneck in how quickly instructions can get from the L1 instruction cache to the front-end instruction decoder of the CPU. The major issue here is that due to RC delay in the metal wire interconnects of a chip, increasing the size of an instruction cache inherently increases the number of cycles that it takes for an instruction to get from the L1 cache to the instruction decoder on the CPU. If a cache doesn’t have the instruction that you need, it could take hundreds of cycles for it to arrive from main memory.

Of course, there are other issues worth considering. For example, in the case of x86, the instructions themselves can be incredibly complex. One of the simplest examples is the add instruction, where either the source or the destination can be in memory, although not both at once. An example of this might be addq (%rax,%rbx,2), %rdx, which could take 5 CPU cycles to complete on something like Skylake. Of course, pipelining and other tricks can make the throughput of such instructions much higher, but that's another topic that can't be properly addressed within the scope of this article.

By comparison, the ARM ISA has no direct equivalent to this instruction. Looking at our example of an add instruction, ARM would require a load instruction before the add instruction. This has two notable implications. The first is that this once again is an advantage for an x86 CPU in terms of instruction density because fewer bits are needed to express a single instruction. The second is that for a “pure” CISC CPU you now have a barrier for a number of performance and power optimizations as any instruction dependent upon the result from the current instruction wouldn’t be able to be pipelined or executed in parallel.
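As a rough sketch of that difference, the two functions below do the same work on a toy machine whose registers and memory are plain dictionaries. The function names, the scratch register `tmp`, and the sample values are assumptions made for illustration; this models semantics only, not timing or real instruction encodings.

```python
def effective_address(base: int, index: int, scale: int, disp: int = 0) -> int:
    """Address named by an x86 operand written as disp(base, index, scale)."""
    return base + index * scale + disp


def x86_style_add(regs: dict, mem: dict) -> None:
    """One instruction: addq (%rax,%rbx,2), %rdx reads memory and adds."""
    addr = effective_address(regs["rax"], regs["rbx"], 2)
    regs["rdx"] += mem[addr]


def load_store_style_add(regs: dict, mem: dict) -> None:
    """Load/store style: memory is touched only by the load, then a register add."""
    addr = effective_address(regs["rax"], regs["rbx"], 2)
    regs["tmp"] = mem[addr]        # step 1: load into a scratch register
    regs["rdx"] += regs["tmp"]     # step 2: register-to-register add


memory = {0x1008: 32}
a = {"rax": 0x1000, "rbx": 4, "rdx": 10}
b = dict(a)

x86_style_add(a, memory)
load_store_style_add(b, memory)
print(a["rdx"], b["rdx"])  # 42 42
```

Both paths leave the same value in rdx. The x86 form packs the address calculation, the memory read and the add into one instruction, while the load/store form exposes the load as its own step.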

The final issue here is that x86 just has an enormous number of instructions that have to be supported due to backwards compatibility. Part of the reason why x86 became so dominant in the market was that code compiled for the original Intel 8086 would work with any future x86 CPU, but the original 8086 didn’t even have memory protection. As a result, all x86 CPUs made today still have to start in real mode and support the original 16-bit registers and instructions, in addition to 32-bit and 64-bit registers and instructions. Of course, to run a program in 8086 mode is a non-trivial task, but even in the x86-64 ISA it isn't unusual to see instructions that are identical to the x86-32 equivalent. By comparison, ARMv8 is designed such that you can only execute ARMv7 or AArch32 code across exception boundaries, so practically programs are only going to run one type of code or the other.

Back in the 1980s up to the 1990s, this became one of the major reasons why RISC was rapidly becoming dominant as CISC ISAs like x86 ended up creating CPUs that generally used more power and die area for the same performance. However, today ISA is basically irrelevant to the discussion due to a number of factors. The first is that beginning with the Intel Pentium Pro and AMD K5, x86 CPUs were really RISC CPU cores with microcode or some other logic to translate x86 CPU instructions to the internal RISC CPU instructions. The second is that decoding of these instructions has been increasingly optimized around only a few instructions that are commonly used by compilers, which makes the x86 ISA practically less complex than what the standard might suggest. The final change here has been that ARM and other RISC ISAs have gotten increasingly complex as well, as it became necessary to enable instructions that support floating point math, SIMD operations, CPU virtualization, and cryptography. As a result, the RISC/CISC distinction is mostly irrelevant when it comes to discussions of power efficiency and performance as microarchitecture is really the main factor at play now.

408 Comments


tim851 - Friday, January 22, 2016

"Pro" is just a marketing moniker. There are smartphones that carry it.

Apple wants iOS to succeed. People wonder if OSX will come to the iPad, I think Apple would rather consider bringing iOS to Macs. They are fanatical about simplicity and an iPad with iOS got that in spades.

And that's why they are taking the opposite approach of Microsoft.

Microsoft is trying to make their desktop OS touch-friendly enough. Apple is trying to make their touch OS productive enough.

Windows devs are by and large ignoring Metro, the touch UI, and just deploy desktop apps. Apple wants to force devs to find ways to bring professional grade software to iOS.

I'm quite happy that the two companies are exploring different avenues instead of racing into the same direction.

ddriver - Friday, January 22, 2016

"I'm quite happy"

People should really have higher standards of expectations, because otherwise, the industry will take its sweet time milking them and barely making any increments in the value and capabilities of their products. They won't make it better until people demand better, the industry is currently in a sweet spot where it gets to dictate demand, by lowering people's expectations to the point they don't know and can't even imagine any better than what the industry makes.

People should stop following the trends dictated by the industry, and really should look beyond that, which the industry is willing to do at this point, towards what is now possible to do and has been for a while really. Because otherwise, no matter how much technology progresses, this will not be reflected by the capabilities of people, if it is up to the industry, it will keep putting that into almost useless shiny toys rather than the productivity tools they could be.

exanimo - Monday, January 25, 2016

ddriver, I want to start out by commending you on your writing and ideas. Top notch, really.

I also really enjoy your idealist approach to saying that people should be dictating the industry, rather than vice versa (seriously). My only question is how can one do that as a consumer? It seems to me that we have little or no choice but to follow trends because Google, Apple, and Microsoft are becoming too big to fail.

A perfect anecdote would be BlackBerry's OS10. They came late to the show (after they realized you can be too big to fail when you become stagnant) and released a technically superior mobile OS that had the consistency and reliability of iOS, with the control and versatility of Android. On top of that was the use of gestures and an amalgamated hub for messages. I wish I had a choice to use this operating system, but the writing on the wall says that it will collapse within the next 2 years. This is because they're still losing market shares and people are not supporting applications.

There is innovation, but it's stomped out by these huge companies and THE PEOPLE that dictate which OS to develop for.

The Hardcard - Friday, January 22, 2016

What software do you use that came out in 1981 when the PC launched? Probably none. Virtually guaranteed none. It is surprising the lack of forward vision sometimes. In five years there will be plenty of professional software on iOS, to run on the significantly more powerful iPad Pro Whatever. The writing is on the wall.

ddriver - Friday, January 22, 2016

There were barely any software development tools back then, and barely any software developers for that matter. Today there are plenty of software development tools, and plenty of software developers, plus mobile devices have been around for a while. Yet none of those seems to produce any professional software, despite all the time and the fact the hardware is good enough. As I said earlier, this is entirely due to the philosophy, advocated for mobile devices - those should not be tools for consumers to use, but tools through which the consumers are being used. This market was invented for milking people, not for making them more capable and productive.

andrewaggb - Friday, January 22, 2016

I think it really goes back to what a person needs to be productive. For some people that is just a web browser (eg chromebook). I have no doubt that the iPad pro may be productive for some people/uses and be everything they need in a computing device.

In my case, as a windows/linux/web software developer I need a windows machine (or vm), with visual studio, sql server, eclipse, postgres, ms office, and various supporting apps. For me a chromebook or ipad is not a pro device or really even useful. I have various co-workers with SP3/4's + dock that drive dual screens and peripherals and get by ok. I like to run vm's and various other things that cause 16gb of ram to not be enough, so I'm stuck in desktop/premium laptop territory. I really don't mind that.

Personally - I barely use my ipad air and ended up installing crouton (ubuntu) on the chromebook. I'm sure other people are different.

Different devices for different kinds of professionals.

lilmoe - Friday, January 22, 2016

Your point?

$1000 laptops (even from Apple) are MUCH more powerful already, and they will get even more powerful. Same can be said about $1000 Windows 10 tablets. Technology will always progress, this isn't restricted to iPads.

Why is everyone trying to make iOS for professional productivity a thing? Why torture ourselves? Do you guys really believe it's only about computing power, which by the way isn't nearly close to being adequate? Good luck moving that 200GB RAW 4K video clip on that thing, let alone edit it. Good luck using it for 3D modelling and engineering. Good luck writing and compiling software...

As pointless as the new Macbook was, it sure as heck is a lot better than this thing for what it's advertised for...

This is an accessory, NOT a pro product. "The writing is on the wall"...................

ddriver - Friday, January 22, 2016

"Why is everyone trying to make iOS for professional productivity a thing?"

You ENTIRELY miss the point, which is "why is NOBODY doing it". It is a computer, REDUCED to an accessory, which COULD be THAT MUCH MORE USEFUL.

Actually, using OpenCL even mobile hardware can process high resolution video faster than a good video workstation was capable not 5 years ago. The hardware is perfectly capable of audio, video editing, 3d modelling, graphics, engineering, software development and whatnot. It is not as fast as the fastest desktop workstation, but it is fast enough to do the job, while still being very portable. All it lacks is the software to do it.

lilmoe - Friday, January 22, 2016

Cool story, nice mood swings, you're amazing. lol

But still. Why torture yourself with iOS running on crippled "hardware", when there are devices that do iPad stuff better than iPads, run desktop class OSs and already have the software you need for the engineering and productivity stuff.

Because buying multiple devices to accomplish one task is a better thing to do?

ddriver - Friday, January 22, 2016

What a touching attempt at condescending cynicism. Alas, as always you get things the wrongest way possible. Those capitalized words were not the product of mood, but motivated by your poor cognitive abilities, a last resort attempt at making the painfully obvious a tad more obvious, so that hopefully, you could finally get it. Unfortunately, you seem to be entirely hopeless.

"Because buying multiple devices to accomplish one task is a better thing to do?"

It is you who advocates such things. My point is exactly that - given the proper software, an ipad would be all that is needed, no need to buy an ipad AND a laptop to get your work done.

And that would be the last set of keystrokes I waste on you. Seriously dude, invest some time in improving yourself.